k8s

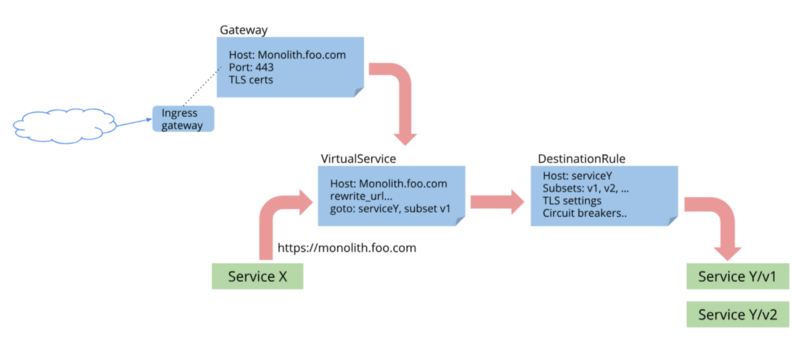

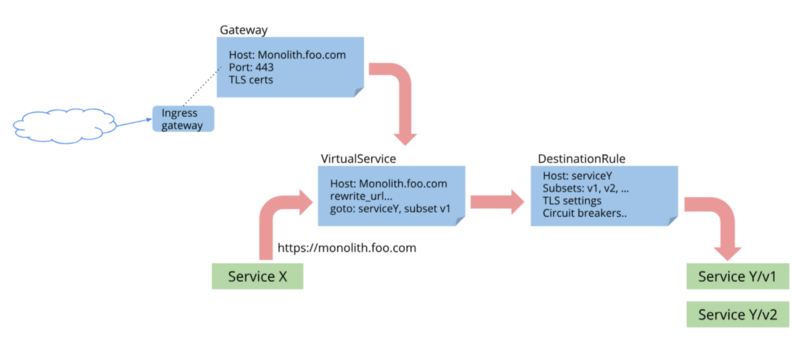

I’ve been following the news about istio since it’s first alpha release in 2017. I think this project has a great future, because it solves a lot of pain points in the microservice based architecture, like auth, observability, fault-injection, etc.

But the fact is, I never actually tried it. Prior to v1.0 there were too many bugs and limitations to put it into production. So, when they finally released “production-ready” version I got quite exciting.

How to deploy multi-arch Kubernetes cluster using Kubespray I recently bought 3 ODROID-HC1 devices to add a dedicated storage cluster to my home Kubernetes. I thought that it’s a good excuse to spend some time redeploying the cluster. Usually, I would’ve gone with CoreOS, since I’m a big fan of their immutable OS. Unfortunately, that is not an option if you have ARM nodes. So I had to choose between manual provisioning and Ansible.

Home Lab Infrastructure Overview Every software or technology I blog about usually goes through my home lab first. A lot of people usually got surprised when they first hear that I have a multi-node Kubernetes cluster at home. It usually takes some time to tell them about all the machines and networking. Of course, not accounting for the time spent answering the question “why do you need it”. I added a few new devices and reconfigured everything from scratch recently.

A few days ago when I tried to install helm chart in my Kubernetes cluster I noticed that all new pods that required storage were in pending state. After a quick check of the logs, I found out that pods were unable to get PVC from GlusterFS. I recently wrote about my experience deploying GlusterFS cluster. This time I will go through recovering data from the broken GlusterFS cluster, and some problems I faced deploying new cluster.

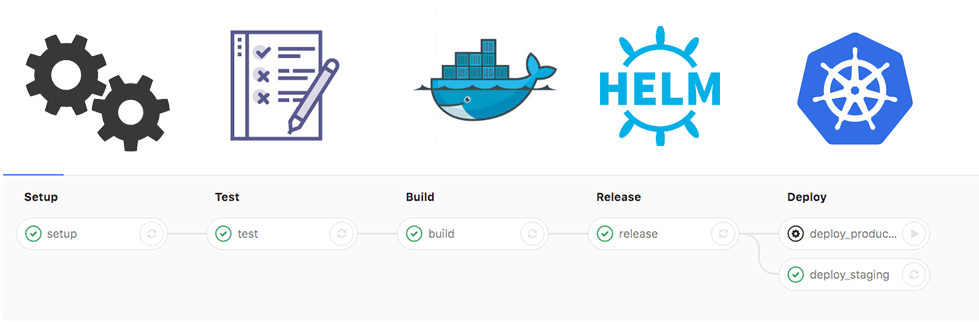

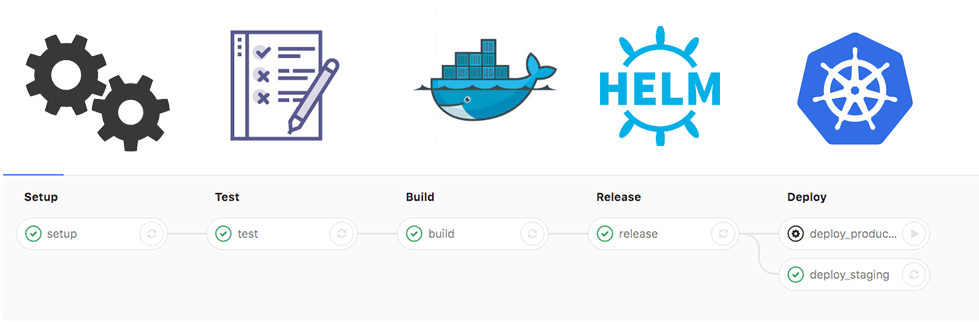

Since my previous posts[1][2] about CI/CD, a lot have changed. I started using Helm for packaging applications, stopped using docker-in-docker in gitlab-runner.

Recently, I started working on a few Golang microservices. I decided to try gitlab’s caching and split the job into multiple steps for better feedback in UI.

Few of the main changes to my .gitlab-ci.yaml file since my previous post:

no docker-in-docker using cache for packages instead of a prebuilt image with dependencies splitting everything into multiple steps.