How to run Functions in your Kubernetes cluster

How to run serverless functions in a Kubernetes cluster.

Serverless or Function as a Service

What is Serverless/FaaS?

Serverless is a new paradigm in computing that enables simplicity, efficiency and scalability for both developers and operators.

For a long time, there was only one FaaS implementation available for Kubernetes: Funktion. I found it a bit complicated and tightly coupled with the fabric8 platform. They claim to support functions in any language, but I found only Java and Node.js examples in the repo. Since I mostly write in Python and Golang, it was not very useful for me.

From time to time I googled for other implementations. Finally, at the end of last year, I read about IronFunctions. It’s written in Golang and really does support functions in any language. So I decided to try it out.

Deploying Functions platform

Deploying the IronFunctions platform is pretty straightforward. The official repository has docs describing deployment to Kubernetes, Swarm and AWS Lambda. The Kubernetes manifests provided by IronFunctions are split into two folders: one for testing and one for production. The first deploys a single pod with all the needed services encapsulated in it. The production one deploys PostgreSQL, Redis and Functions separately. For this post I’m going to use the kubernetes-quick manifests.

git clone https://github.com/iron-io/functions

cd functions/docs/kubernetes/kubernetes-quick

kubectl create -f .

After a successful deploy, we can access IronFunctions in two ways: through the CLI or with cURL. I’m going to use the CLI for all commands in this post. You can find the corresponding REST calls in the documentation.

First, let’s install the CLI and test it out.

curl -sSL http://get.iron.io/fn | sh

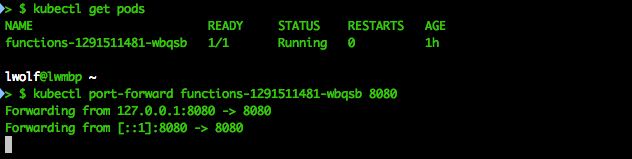

By default, the CLI expects the functions platform to be available on localhost:8080. So we need to either use port-forwarding or tell it to use another address:port. I’m going to use port-forwarding.

If you can’t use port-forwarding, set the environment variable IRON_FUNCTION and point it to your deployed functions service:

export IRON_FUNCTION=<YOUR_KUBERNETES_NODE>:$IRON_PORT
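As a rough illustration of the address resolution described above, here is a minimal Python sketch. The helper name `resolve_api_base` is hypothetical, and the way the real CLI parses `IRON_FUNCTION` is an assumption; only the variable name and the `localhost:8080` default come from this post.

```python
import os


def resolve_api_base(env=None):
    """Return the base URL of the functions API.

    Assumption: IRON_FUNCTION holds "host:port" (as in the export above).
    When it is unset or empty, fall back to the CLI default localhost:8080.
    """
    env = os.environ if env is None else env
    address = env.get("IRON_FUNCTION", "").strip() or "localhost:8080"
    # Add a scheme only if the user did not provide one.
    if not address.startswith(("http://", "https://")):
        address = "http://" + address
    return address.rstrip("/")


if __name__ == "__main__":
    print(resolve_api_base())
```

Passing the environment as a plain dict makes it easy to check both cases (variable set, variable missing) without touching the real shell environment.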

To test it, let’s list the created applications.

> $ fn apps list

no apps found

Creating our first function

Now we are ready to write our first function. For the purpose of this post, we’re going to write a simple Python function that posts messages to a Slack channel. You will need a Slack API token for this.

Let’s create func.py:

import sys

sys.path.append("packages")

import os

import json

from slacker import Slacker

if not os.isatty(sys.stdin.fileno()):

    obj = json.loads(sys.stdin.read() or '{}')

    if all([x in obj for x in ['token', 'channel', 'message']]):

        slack = Slacker(obj['token'])

        slack.chat.post_message(obj['channel'], obj['message'])

It’s based on one of the examples provided by IronFunctions. You can find a lot more of them in the repository.
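To try the validation logic of func.py without a Slack token or network access, one option is to separate the parsing from the posting. The sketch below is not part of the IronFunctions example: `handle` and the `post_message` callable are hypothetical names, and the callable stands in for the `Slacker` call.

```python
import json

REQUIRED_KEYS = ("token", "channel", "message")


def handle(raw_input, post_message):
    """Parse the JSON payload and post it if all required keys are present.

    `post_message(token, channel, message)` is a hypothetical callable that
    stands in for the Slacker call, so the logic runs without network access.
    Returns True when a message was posted, False otherwise.
    """
    obj = json.loads(raw_input or "{}")
    if not all(key in obj for key in REQUIRED_KEYS):
        return False
    post_message(obj["token"], obj["channel"], obj["message"])
    return True


if __name__ == "__main__":
    sent = []
    # Record calls instead of contacting Slack.
    fake_post = lambda token, channel, message: sent.append((channel, message))
    handle('{"token": "t", "channel": "#testing", "message": "hi"}', fake_post)
    handle('{"channel": "#testing"}', fake_post)  # ignored: keys are missing
    print(sent)
```

In the real function the callable would wrap `Slacker(token).chat.post_message(channel, message)`, exactly as in func.py above.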

Next, we need to create func.yaml. This is the manifest used to actually build and deploy the function. For Golang, it can be generated by running fn init repo:name.

app: slackfn

name: lwolf/fn_python

version: 0.0.1

build:

- rm -Rf packages/

- docker run --rm -v "$PWD":/worker -w /worker iron/python:3-dev pip install -t packages -r requirements.txt

And the Dockerfile:

FROM iron/python:3.5.1

WORKDIR /function

ADD . /function/

CMD python func.py

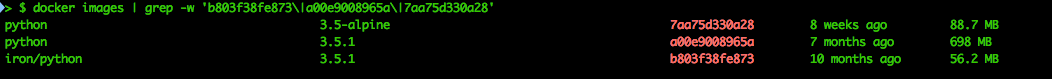

I decided to use the Python image from IronFunctions because it’s the most lightweight of all, and when dealing with functions, size really matters. Since it’s almost impossible to reduce Python images below 50-60 MB, it really makes sense to write functions in Golang. Here is an awesome post about building production Golang containers with a size of around a few megabytes.

Now let’s build and push our function.

# build the function

> $ fn build

# test it

> $ fn run

# push it to Docker Hub

> $ fn push

# create an app

> $ fn apps create slackfn

slackfn created

# create route

> $ fn routes create slackfn /sayit

/sayit created with lwolf/fn_python:0.0.1

And let’s test that it works.

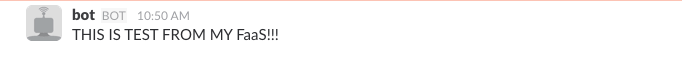

> $ echo '{"token":"<TOKEN>", "channel":"#testing", "message":"THIS IS TEST FROM MY FaaS!!!"}' | fn call slackfn /sayit

Looks good, but sending the token each time is not very convenient.
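For readers who prefer HTTP over the CLI, the call above could be sketched in Python roughly as follows. This is an illustration under assumptions: the `/r/<app>/<route>` path layout is how I understand the platform to expose routes, and `build_call_request` is a hypothetical helper, so check the REST documentation before relying on it. The request is only built here, not sent.

```python
import json
import urllib.request


def build_call_request(base_url, app, route, payload):
    """Build (but do not send) a POST request that invokes a route.

    Assumption: the platform serves routes under /r/<app>/<route>.
    """
    url = "{}/r/{}/{}".format(base_url.rstrip("/"), app, route.lstrip("/"))
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    request = build_call_request(
        "http://localhost:8080",
        "slackfn",
        "/sayit",
        {"token": "<TOKEN>", "channel": "#testing", "message": "Hello"},
    )
    print(request.get_method(), request.full_url)
    # To actually send it: urllib.request.urlopen(request)
```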

Configuring application

IronFunctions provides a way to configure both the application and the route. It should be possible to set and unset config variables on existing apps but, for some reason, it didn’t work for me. So I’m going to delete and recreate my application.

# delete routes first

> $ fn routes delete slackfn /sayit

# delete application

> $ fn apps delete slackfn

# now let's create new application and set our slack token in config

> $ fn apps create --config TOKEN=<TOKEN> slackfn

Here we go. Now let’s change our function code to get the token from the config. Settings are available as environment variables prefixed with an underscore, so TOKEN becomes _TOKEN.

import sys

sys.path.append("packages")

import os

import json

from slacker import Slacker

if not os.isatty(sys.stdin.fileno()):

    obj = json.loads(sys.stdin.read() or "{}")

    token = os.environ['_TOKEN']

    if all([x in obj for x in ['channel', 'message']]):

        slack = Slacker(token)

        slack.chat.post_message(obj['channel'], obj['message'])
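The underscore convention can be wrapped in a small lookup helper, which also avoids a `KeyError` when a value has not been configured. This is a minimal sketch; `get_config` is a hypothetical name, not something provided by IronFunctions.

```python
import os


def get_config(name, env=None, default=None):
    """Look up a config value set with `--config NAME=value`.

    Per the post, settings reach the function as environment variables
    prefixed with an underscore, so TOKEN is read from _TOKEN.
    """
    env = os.environ if env is None else env
    return env.get("_" + name, default)


if __name__ == "__main__":
    token = get_config("TOKEN")
    if token is None:
        print("TOKEN is not configured for this app")
    else:
        print("TOKEN is configured")
```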

Let’s check it out.

# bump version of container

> $ fn bump

# build new container

> $ fn build

# push new container to the registry

> $ fn push

# add new route

> $ fn routes create slackfn /sayit

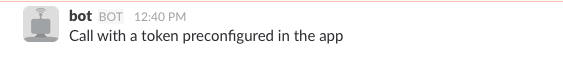

Now if we call our endpoint without a token, it should still work:

> $ echo '{"channel":"#testing", "message":"Call with a token preconfigured in the app"}' | fn call slackfn /sayit

This looks better, but let’s add another level of flexibility. Let’s create endpoints with preconfigured channel names. First, let’s change our code again. This is the final version.

import sys

sys.path.append("packages")

import os

import json

from slacker import Slacker

if not os.isatty(sys.stdin.fileno()):

    obj = json.loads(sys.stdin.read() or "{}")

    # .get() avoids a KeyError when a value is missing

    token = os.environ.get('_TOKEN')

    channel = os.environ.get('_CHANNEL')

    if all([token, channel, obj.get('message')]):

        slack = Slacker(token)

        slack.chat.post_message(channel, obj['message'])

Now bump, build and push the new container, and create the new routes:

# bump version of container

> $ fn bump

# build new container

> $ fn build

# push new container to the registry

> $ fn push

# create 2 routes with preconfigured channel names

> $ fn routes create --config CHANNEL=#mychannel1 slackfn /sayit/chan1

> $ fn routes create --config CHANNEL=#mychannel2 slackfn /sayit/chan2
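To see how the app-level TOKEN and the route-level CHANNEL come together inside the function, here is a small local simulation. It is a sketch under assumptions: `function_env` and `handle` are hypothetical names, and the rule that a route value overrides an app value with the same key is my guess, not something this post verifies.

```python
import json


def function_env(app_config, route_config):
    """Merge app and route config into underscore-prefixed variables.

    Assumption: route-level values win when both levels define a key.
    """
    merged = dict(app_config)
    merged.update(route_config)
    return {"_" + key: value for key, value in merged.items()}


def handle(raw_input, env, post_message):
    """Final version of the function logic with an injectable poster."""
    obj = json.loads(raw_input or "{}")
    token = env.get("_TOKEN")
    channel = env.get("_CHANNEL")
    message = obj.get("message")
    if not all([token, channel, message]):
        return False
    post_message(token, channel, message)
    return True


if __name__ == "__main__":
    app_config = {"TOKEN": "<TOKEN>"}
    routes = {
        "/sayit/chan1": {"CHANNEL": "#mychannel1"},
        "/sayit/chan2": {"CHANNEL": "#mychannel2"},
    }
    for path, route_config in routes.items():
        env = function_env(app_config, route_config)
        # Print instead of posting to Slack.
        handle('{"message": "hello"}', env,
               lambda token, channel, message: print(path, "->", channel, message))
```

With this setup, callers of /sayit/chan1 and /sayit/chan2 only need to send the message; the token and the channel come from the configuration.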

That’s it. Now we have a working serverless application in our Kubernetes cluster. It’s pretty simple, but it shows some of the basic features. The only way to update a route or an application is to delete and recreate it, which is a bit annoying, but I think it will be fixed soon.
